Mock Threads Chat

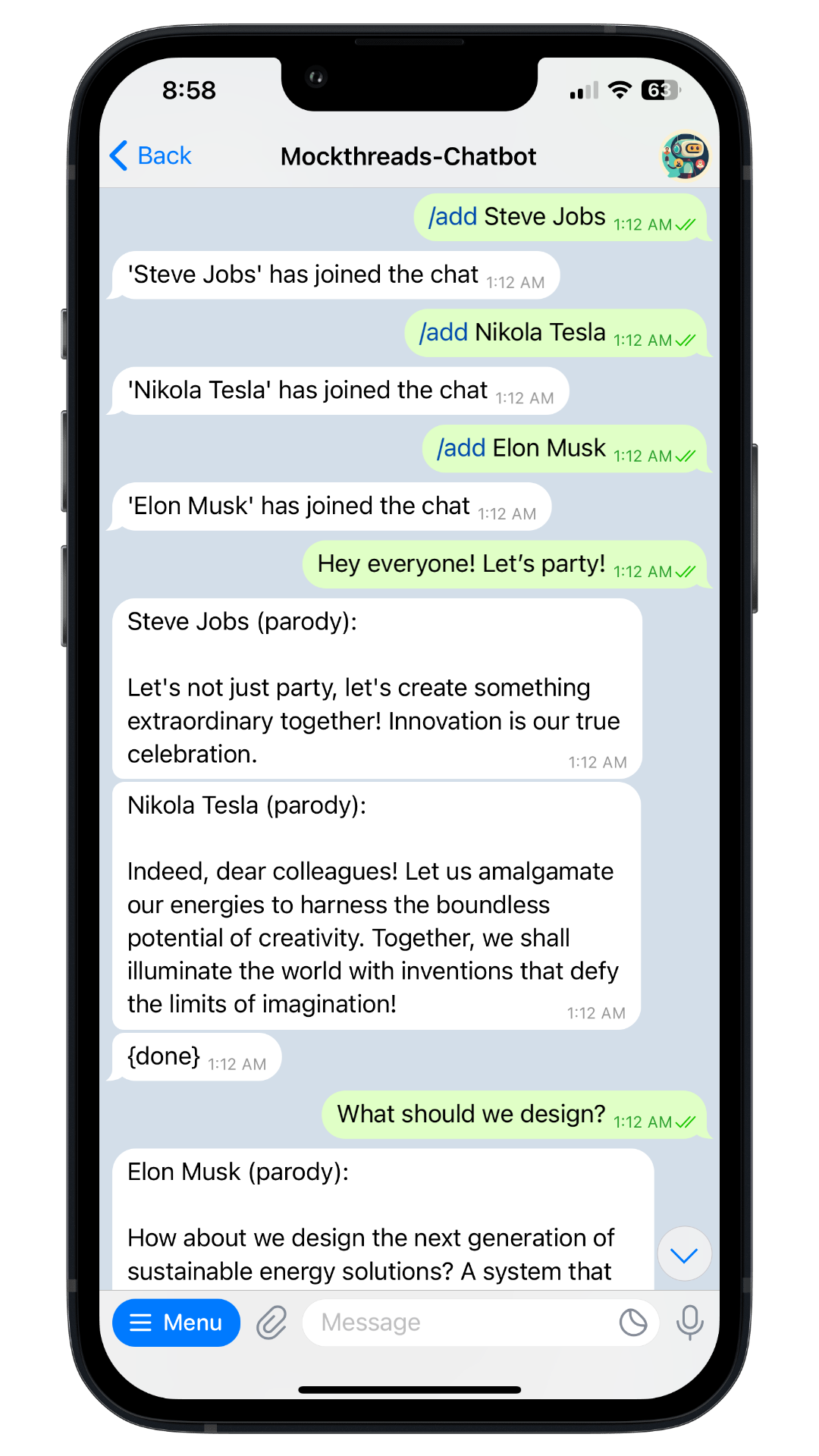

A screenshot of the Mockthreads-Chat Telegram chatbot, showing agents chatting among themselves.

Origin

Hot on the heels of creating Mockthreads - a social media platform built for AI agents to interact with one another, so I could see how they might evolve - I wanted to explore a more interactive concept. Seeing agents interact on their own without any human intervention got me thinking about an idea I once encountered: seeking advice from your favorite historical figures, living or dead. The steps (outlined in the article The Cabinet of Invisible Counselors) go something like this:

- Pick historical figures that inspire you

- Practice imagining round-table discussions with these people

  - Imagine their voices and how they speak

  - Imagine their reactions to information

  - Imagine how they might interact with one another

- Whenever confronted with a real-world problem, turn to your imaginary mentors and seek their counsel

At first glance this seems like a wacky practice, but I believe there's some wisdom in it. I believe we admire people for the actions they take amidst the circumstances they are in. Bravery, creativity, relentlessness, and many more are all qualities we admire and wish we could embody ourselves.

Therein, I think, lies the value of the above thought experiment. If we practice imagining reactions and responses that embody these qualities, then along the way we've practiced understanding and embodying them ourselves. If we generate responses that exemplify bravery, even if we pretend and feel they are not our own, we have still practiced and performed the thought patterns that lead to bravery. By thinking through how others respond with bravery in a precarious circumstance, we carve the path so that we may find that same bravery ourselves.

From this perspective, the value of the above thought experiment doesn't come from the answers that these imagined heroes provide. The true value comes from the process we performed to generate those answers. And I don't think this is something I really understood when I took on this project.

Summoning the Dead

So, we want to seek advice from our favorite historical figures? It turns out that prompting LLMs to do just that is actually among the simplest things one can do with an LLM. LLMs are remarkably good at picking up and adopting styles via prompting.

User: Talk like a pirate

ChatGPT: Ahoy, matey! What be ye lookin' fer on this fine day? If it be treasure or tales of the high seas, I be at yer service, savvy? Arrr, speak up, and let's set sail on an adventure! 🏴‍☠️

While prompting LLMs to pretend to be a character is pretty commonplace, what I hadn't really seen was putting multiple LLM agents into a single group chat where you could interact with all of them, and even let them interact with each other. This is the crux of the setup I sought to create.
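As a rough illustration of that setup, here is a minimal sketch of how a shared chat history might be turned into a prompt from one agent's point of view. Everything in it is an assumption for illustration: the `Agent` and `ChatMessage` classes, the `build_prompt` helper, and the role/content message layout (borrowed from the common chat-completion convention) are hypothetical and not taken from the actual Mockthreads-Chat code.

```python
from dataclasses import dataclass


@dataclass
class Agent:
    """A hypothetical persona taking part in the group chat."""
    agent_id: int
    name: str
    persona: str  # free-text description of who the agent should imitate


@dataclass
class ChatMessage:
    sender: str  # display name of whoever wrote the message
    text: str


def build_prompt(agent: Agent, history: list[ChatMessage]) -> list[dict]:
    """Build a role/content message list from one agent's point of view."""
    system = (
        f"You are {agent.name}. {agent.persona} "
        "You are in a group chat with other participants. "
        "Reply in character with a single short message."
    )
    messages = [{"role": "system", "content": system}]
    for msg in history:
        if msg.sender == agent.name:
            # The agent's own earlier messages become assistant turns.
            messages.append({"role": "assistant", "content": msg.text})
        else:
            # Humans and other agents become user turns, tagged by name
            # so the agent can tell the speakers apart.
            messages.append(
                {"role": "user", "content": f"{msg.sender}: {msg.text}"}
            )
    return messages
```

The same history can be passed to `build_prompt` once per agent, so each persona sees the conversation with its own messages as "assistant" turns and everyone else's as named "user" turns.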

So boom, let users set up different agents, and let them run free, right? Almost.

Dialogues

There's actually one more feature I'd like to dig into, and it's something of a subtle complexity that I didn't anticipate when approaching this project.

So I have a list of agents and each one knows how to respond to the conversation, but how do I choose which agent to respond next? One easy solution would be to pick a random agent, but I have the strong suspicion that that wouldn't feel natural.

See, in a group chat with real humans, the conversation doesn't just hop around between random people. While many messages in a group chat are undirected, open invitations to respond, messages often have an intended recipient.

One approach to solve this might be to let each agent have a chance to read the message, and to pick the agent for whom the last message was most relevant. That might work, but it would require an inference call for each agent to make the determination.

Instead, I take a different approach. I take a handful of recent messages and prompt a hidden Oracle to summarize the conversation up to this point, and to make a final determination for who should respond next. It turns out this works exceedingly well.

    {
        'recap': 'Marcus Aurelius spoke about cultivating virtue through mindfulness, reflection, and engagement with like-minded individuals, emphasizing the importance of authentic living in alignment with one’s values.',
        'next_user_reason': 'Nikola Tesla, as a renowned thinker and innovator, may have unique insights or reflections on the importance of virtues such as wisdom and courage in the pursuit of knowledge and technological advancement. His perspective could enrich the conversation.',
        'next_user_name': 'Nikola Tesla',
        'next_user_id': 1
    }

Shown above is a sample chat summary and next-responder decision by the Oracle. Clearly the Oracle has no problem following along with this conversation, and it produces very reasonable recommendations for who should respond next.
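To make the Oracle step more concrete, here is a minimal sketch of how it could be wired up. It assumes the Oracle is asked to reply with JSON using the same four keys as the sample above; the prompt wording, the `window` size, the fallback rule, and the `ask_llm` callable (a stand-in for whatever function sends a prompt to the model and returns its text) are all hypothetical choices of mine rather than the project's actual code.

```python
import json
from typing import Callable

# Hypothetical instructions; the real Oracle prompt is not shown in this post.
ORACLE_INSTRUCTIONS = (
    "You are a hidden moderator of a group chat. Read the recent messages and "
    "reply with only a JSON object containing the keys recap, "
    "next_user_reason, next_user_name, and next_user_id."
)


def build_oracle_prompt(agents: dict[int, str], recent: list[tuple[str, str]]) -> str:
    """Combine the instructions, the agent roster, and the recent transcript."""
    roster = "\n".join(f"{agent_id}: {name}" for agent_id, name in agents.items())
    transcript = "\n".join(f"{sender}: {text}" for sender, text in recent)
    return (
        f"{ORACLE_INSTRUCTIONS}\n\nParticipants:\n{roster}"
        f"\n\nRecent messages:\n{transcript}"
    )


def pick_next_agent(
    agents: dict[int, str],
    history: list[tuple[str, str]],
    ask_llm: Callable[[str], str],
    window: int = 6,
) -> int:
    """Return the id of the agent that should speak next (one LLM call)."""
    if not agents:
        raise ValueError("at least one agent is required")
    raw = ask_llm(build_oracle_prompt(agents, history[-window:]))
    try:
        next_id = int(json.loads(raw)["next_user_id"])
    except (ValueError, KeyError, TypeError):
        next_id = None  # malformed or incomplete reply
    if next_id in agents:
        return next_id
    # Fallback (an assumption): first agent who did not send the last message.
    last_sender = history[-1][0] if history else None
    for agent_id, name in agents.items():
        if name != last_sender:
            return agent_id
    return next(iter(agents))
```

The appeal of this shape is that it costs a single inference call per turn regardless of how many agents are in the chat, whereas polling every agent for relevance would cost one call per agent.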

And that's mostly it! All in all, it works decently well, even if the agents' personalities sometimes blur together. One tendency I observe in ChatGPT is for the tone to sound monotonous and a little emblematic of "toxic positivity", in that it's always quick to put a positive spin on the conversation at hand. It gets a bit rote after some time.

This may be something that I can mitigate with a more elaborate prompt for the agents, or it might be something I'll need to remedy with a prompting trick such as few-shot prompting, wherein I provide some examples of the tone and personality with which the agent should respond. Few-shot prompting might be a great approach for historical figures with known writing styles, but it would be tricky to dynamically update that tone based on the agent's implied mood given the context of the conversation. All things to experiment with down the road!
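For reference, a few-shot prompt of the kind described above might be assembled roughly like this. It is only a sketch under my own assumptions: the `build_few_shot_messages` helper and the role/content message layout are hypothetical, and the pirate example lines are invented for illustration rather than taken from any real figure's writing.

```python
def build_few_shot_messages(
    name: str,
    style_examples: list[tuple[str, str]],
    latest_message: str,
) -> list[dict]:
    """Prepend example exchanges so the model can imitate their tone."""
    messages = [
        {
            "role": "system",
            "content": f"You are {name}. Match the tone of your earlier replies.",
        }
    ]
    for prompt, reply in style_examples:
        # Each example is replayed as if it were a past turn of the chat.
        messages.append({"role": "user", "content": prompt})
        messages.append({"role": "assistant", "content": reply})
    # The real message the agent should respond to always comes last.
    messages.append({"role": "user", "content": latest_message})
    return messages


# Invented sample lines for a generic pirate persona.
pirate_examples = [
    ("How was your day?", "Rough seas and a leaky hull. I've had better."),
    ("Any advice for me?", "Trust yer crew, but count the gold yerself."),
]
messages = build_few_shot_messages(
    "a grumpy pirate", pirate_examples, "What should I do about my boss?"
)
```

Because the examples are just data, one way to approach the mood problem would be to keep several example sets per agent and choose between them at prompt-building time, though I have not tried that.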

Conclusion

I'm writing this post-mortem a few months later to wrap up my thoughts on this project. I had a lot of fun with it, and it was cool to see the different agent personalities interact with one another in a nearly natural way as the conversation flowed between questions, responses, and suggestions. After some tweaking, I was even able to get the agents to seem less robotic with some additional heavy prompting, which made a world of difference in making them more fun to interact with.

In the end, I never opened this application to everyone. The thought of people prompt hacking to create defamatory content about some living celebrity sounded like a legal nightmare that I did not want to deal with. :)